Published: 10 Jan 2023 by Nippon Kogyo Shuppan.


No-code machine vision IDE “ImagePro” enables practical-level AI image processing applications

Authors

*Takafumi Yano

President and Representative Director, RUTILEA Inc.

Yuichi Oshima

Head of ImagePro development, RUTILEA Inc.

Kazuki Namita

Project Manager, RUTILEA Division, RUTILEA Inc.

Summary

In recent years, deep learning-based machine vision has begun to be adopted in manufacturing. Inspection processes in particular often require complex programming, because deep learning must be combined with conventional image processing techniques. This paper therefore introduces a product that allows deep learning and image processing to be programmed without code, together with an example of its application.

Introduction

Deep learning-based segmentation, reading and classification have been applied to industrial machine vision for some time. One strength of deep learning is that features are learnt from data, which increases detection accuracy. This has enabled applications such as surface appearance inspection, which had been difficult to handle with image processing built on conventional hand-designed procedures and feature engineering (hereafter referred to as rule-based image processing).

However, for positioning and measurement, two other core machine vision applications, matching and image measurement based on local feature points remain effective. For example, Fig. 1 shows the inspection of a printed circuit board with mounted components. Image measurement is well suited to measuring the size of a circle or the length of a pin, while deep learning is well suited to recognising part types and detecting abnormalities. Both deep learning and image measurement are therefore needed for a single workpiece.
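As an illustration of the kind of rule-based measurement meant here, the sketch below estimates the centre and diameter of a circle from edge points using an algebraic least-squares fit (the Kasa method). This is a generic technique chosen for illustration, not necessarily the one ImagePro uses; the edge points are assumed to have already been extracted from the image, and the function names `fit_circle` and `solve3` are hypothetical.

```python
import math


def solve3(a, b):
    """Solve the 3x3 linear system a @ x = b by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (e.g. collinear points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def fit_circle(points):
    """Kasa fit: minimise sum((x^2 + y^2 + D*x + E*y + F)^2) over D, E, F.

    Returns (cx, cy, r) in the same units as the input points (pixels here).
    """
    sx = sy = sxx = syy = sxy = sxz = syz = sz = 0.0
    for x, y in points:
        z = x * x + y * y
        sx += x
        sy += y
        sxx += x * x
        syy += y * y
        sxy += x * y
        sxz += x * z
        syz += y * z
        sz += z
    n = float(len(points))
    # Normal equations of the linear least-squares problem in D, E, F
    d, e, f = solve3(
        [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]],
        [-sxz, -syz, -sz],
    )
    cx, cy = -d / 2.0, -e / 2.0
    r = math.sqrt(cx * cx + cy * cy - f)
    return cx, cy, r


# Example: 16 edge points on a circle centred at (3, 4) with radius 5 px
pts = [(3 + 5 * math.cos(k * math.pi / 8), 4 + 5 * math.sin(k * math.pi / 8)) for k in range(16)]
cx, cy, r = fit_circle(pts)
print(f"centre=({cx:.3f}, {cy:.3f}), diameter={2 * r:.3f} px")  # centre=(3.000, 4.000), diameter=10.000 px
```

In practice the diameter in pixels would then be converted to physical units with a calibration step, as listed under Calibration in Table 1.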

Challenges in applying deep learning

When deep learning is used, it must therefore be combined with rule-based image processing, but such implementations are generally costly, and three factors reduce the return on investment. First, implementation costs are high because few engineers can write the required code. Second, the complex implementation makes testing complicated and raises the cost of ensuring reliability. Finally, few staff are able to maintain the system after deployment, so its upkeep becomes dependent on specific individuals.

Figure 1: Examples where rule-based image processing and deep learning must be used together

Software introduction

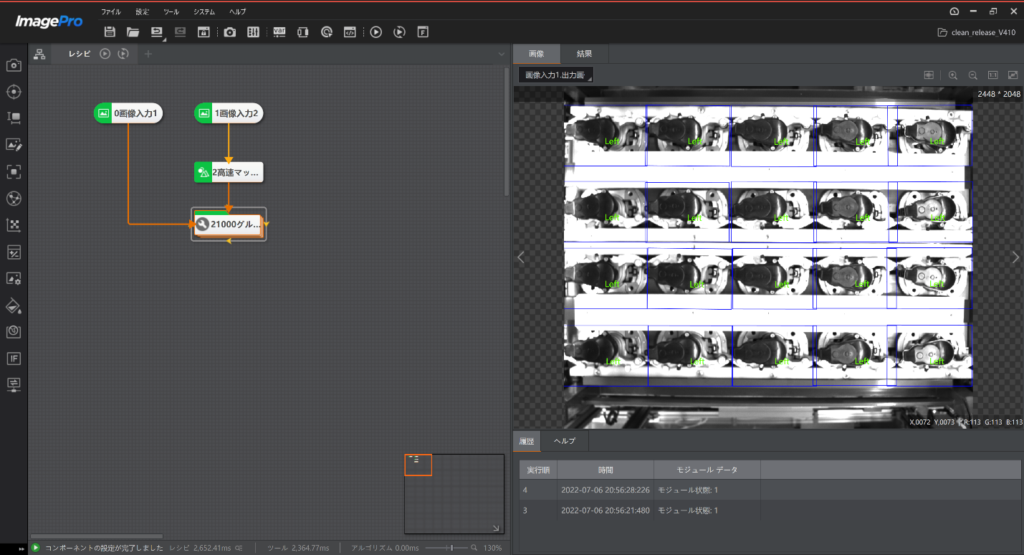

We therefore propose ImagePro, software that solves these problems through no-code programming. Its main screen (Fig. 2) lets users create programmes without writing code; hereafter, programmes created this way are referred to as recipes. The tool collection (A) organises AI, rule-based and other tools by category (Table 1), and tools are dragged and dropped from it into the programme editing area (B) in the centre of the screen. The placed tools are connected to each other with arrows to form a processing flow. Selecting a placed tool displays a preview of its processing results (D). The output history (E) and processing time (F) can also be checked, which makes it easy to debug recipes and to identify bottlenecks when reducing processing time. More advanced functions include global variables, custom scripts, management of external devices, lighting controllers and multiple cameras, and design of execution screens.
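To make the recipe concept concrete, the following minimal sketch models a recipe as an ordered list of tools. Each tool can read the results of the tools before it, reports OK or NG, and is timed so that the slowest step can be identified. All class, function and tool names are hypothetical: the article does not describe ImagePro's internal design, and real recipes are graphs with branches and loops rather than a straight line.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical tool signature: reads the shared results, returns (ok, outputs).
ToolFn = Callable[[Dict[str, Any]], Tuple[bool, Dict[str, Any]]]


@dataclass
class Tool:
    name: str
    run: ToolFn


@dataclass
class RecipeResult:
    ok: bool
    results: Dict[str, Any]
    status: Dict[str, bool] = field(default_factory=dict)       # per-tool OK/NG
    timings_ms: Dict[str, float] = field(default_factory=dict)  # per-tool processing time

    def bottleneck(self) -> str:
        """Name of the tool that took the longest to run."""
        return max(self.timings_ms, key=self.timings_ms.get)


def run_recipe(tools: List[Tool], inputs: Dict[str, Any]) -> RecipeResult:
    results = dict(inputs)
    out = RecipeResult(ok=True, results=results)
    for tool in tools:
        start = time.perf_counter()
        ok, outputs = tool.run(results)
        out.timings_ms[tool.name] = (time.perf_counter() - start) * 1000.0
        out.status[tool.name] = ok
        # Later tools can read earlier outputs, keyed as "<tool name>.<key>"
        results.update({f"{tool.name}.{key}": value for key, value in outputs.items()})
        out.ok = out.ok and ok  # the recipe is NG if any tool is NG
    return out


def acquire(results: Dict[str, Any]) -> Tuple[bool, Dict[str, Any]]:
    """Hypothetical stand-in for a camera input tool: returns a tiny 2x2 image."""
    return True, {"image": [[0, 255], [255, 0]]}


def count_bright(results: Dict[str, Any]) -> Tuple[bool, Dict[str, Any]]:
    """Hypothetical rule-based tool: OK if exactly two pixels are brighter than 128."""
    n = sum(px > 128 for row in results["acquire.image"] for px in row)
    return n == 2, {"count": n}


result = run_recipe([Tool("acquire", acquire), Tool("count_bright", count_bright)], {})
print(result.ok, result.results["count_bright.count"], result.status)
# True 2 {'acquire': True, 'count_bright': True}
print("slowest tool:", result.bottleneck())
```

Keeping per-tool status and timing alongside the results is what allows a colour-coded OK/NG display and a processing-time breakdown like those in the main screen.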

The following sections describe how recipes are created in ImagePro and present an actual case in which one was used to solve a real-world problem.

Figure 2: ImagePro main screen

(A) The tool collection covers image processing and operation tools, including image acquisition, localisation, measurement, recognition, deep learning, calibration, alignment, image calculation, colour processing, defect detection, logic tools and communication units. Add the tools required for the task at hand to build a recipe.

(B) Recipe design area. Tools are connected by lines to build a flow, and each tool can use the results of the tools before it. The colour of each tool indicates its result (OK or NG). Multiple recipes can be opened and switched between using tabs.

(C) In addition to saving and opening recipes, this area gives one-click access to frequently used common tools such as camera settings, external device settings, global variables, global triggers, global scripts and screen creation.

(D) Preview of the processing results of the selected tool. Result images from multiple tools can be overlaid.

(E) Displays the output of the selected tool, its output history and brief instructions for using the tool.

(F) Displays the processing time of the entire recipe, the selected tool and its algorithms, making it easy to find the bottlenecks that account for much of the recipe's processing time.

Table 1: Typical tools

| Function category | Number of tools | Typical tools |
|---|---|---|
| Image input | 5 | Camera input, image file input, lighting control |
| Positioning | 20 | Matching, circle/line/rectangle detection, BLOB analysis, peak detection |
| Measurement | 12 | Line/circle/point-to-point distance measurement, angle measurement, histogram measurement |
| Reading | 6 | Barcode/QR code reading, rule-based OCR, deep learning OCR |
| Deep learning | 6 | Image classification, anomaly detection, object detection, character detection, image search, supervised/unsupervised segmentation (all support both CPU and GPU) |
| Calibration | 8 | Calibration board, distortion correction, real-size conversion, coordinate conversion |
| Position alignment | 4 | Camera mapping, single-point/point-cloud displacement calculation, line position correction |
| Image processing | 18 | Binarisation, morphological operations, image filters, image composition, affine transformation, panorama stitching |
| Colour processing | 4 | Colour extraction/measurement/transformation/recognition |
| Defect detection | 10 | Edge defect detection, contrast defect detection (machine learning) |
| Logical operations | 15 | IF, branching, logical operations, variable type conversion, variable calculation, character comparison, custom scripts (C#), protocol analysis/conversion |
| External connection | 6 | Data transmission/reception, camera I/O output, external device management, data queue management |

Solution example (automation of pre-shipment inspection)

The target workpiece (Fig. 3, right) is an automobile brake component. Because the next process is automatic assembly by a robot, all parts must face the same orientation before shipment. To check this automatically, a rule-based method for estimating workpiece orientation had been studied, but its accuracy deteriorated as the imaging conditions changed with sunlight and weather. Deep learning was therefore adopted to improve accuracy.

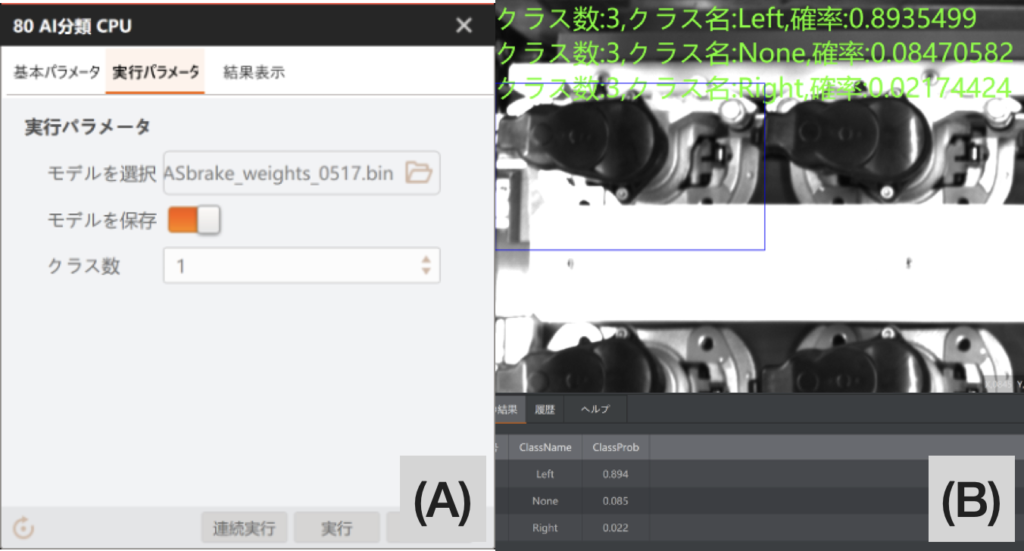

Fig. 3 shows the ImagePro recipe actually created for this task. The recipe is kept simple by grouping common processes and using a for loop to process each part to be inspected. Within the loop, a deep learning image classifier determines whether the component is present and whether it faces right or left. Figure 4 shows the detail screen of the AI classifier, where the deep learning model data (learned parameters) used for classification can be selected and the inference results can be viewed. Inference can run in either GPU or CPU mode; this project used CPU mode because the takt time is relatively long and the model is lightweight, with few parameters. Model training can likewise be performed through the no-code interface.

The orientation of each part is fed into an IF module, which outputs 'normal' if all orientations match and 'abnormal' if any orientation differs or a part is missing.
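The judgement logic described above can be sketched as follows. The deep learning classifier is replaced by a hypothetical callback that returns one of the flags "RIGHT", "LEFT" or "NONE" for each region of interest; the numeric codes used in the string-to-number conversion, and the treatment of an empty region list, are assumptions, as the article does not specify them.

```python
from typing import Callable, Iterable, List

# Assumed numeric codes for the classifier's string flags (string-to-number conversion).
FLAG_CODES = {"NONE": 0, "RIGHT": 1, "LEFT": 2}


def judge_orientation(rois: Iterable[object], classify: Callable[[object], str]) -> str:
    """Return 'normal' if every part is present and all face the same way, else 'abnormal'.

    `classify` is a hypothetical stand-in for the deep learning image classifier and must
    return one of the keys of FLAG_CODES for a single region of interest.
    """
    codes: List[int] = []
    for roi in rois:  # the for loop over each part to be inspected
        codes.append(FLAG_CODES[classify(roi)])
    if not codes:
        return "abnormal"  # assumption: nothing inspected is treated as a failure
    missing = any(code == FLAG_CODES["NONE"] for code in codes)
    same_orientation = all(code == codes[0] for code in codes)
    return "normal" if not missing and same_orientation else "abnormal"


def precomputed_flag(roi: object) -> str:
    """Hypothetical stand-in classifier: each 'roi' here is already a flag string."""
    return str(roi)


print(judge_orientation(["RIGHT"] * 5, precomputed_flag))             # normal
print(judge_orientation(["RIGHT"] * 4 + ["LEFT"], precomputed_flag))  # abnormal (mixed orientation)
print(judge_orientation(["LEFT"] * 4 + ["NONE"], precomputed_flag))   # abnormal (missing part)
```

Converting the flags to numbers first keeps the final decision a plain comparison of integers, which mirrors the string-to-number module followed by logical operations in the recipe.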

The conventional template matching method achieved an orientation recognition accuracy of around 60%, whereas deep learning achieved 99.8% accuracy in operation. In addition, no-code programming allows settings for new workpieces to be made on the production floor, and feedback from the production site has been positive.

Figure 3: Recipes and inspections

In the image on the right, the workpieces arranged four vertically and five horizontally are the regions of interest. Deep learning is applied to each region and outputs one of the flags "RIGHT", "LEFT" or "NONE"; a conversion module turns these strings into numbers, and logical operations are then performed on the results.

Figure 4: (A) AI training settings dialogue and (B) deep learning output results.

A deep learning model can easily be selected by setting up a pre-trained model and inputting a test image for it to recognise.

Conclusion

In this paper, we highlighted the need to combine rule-based methods and deep learning in machine vision, proposed ImagePro as a solution and presented an example of its actual application. Finally, we summarise the effectiveness of ImagePro from three perspectives.

Cost reduction through standardisation and speed-up of development using no-code

Compared with programming in Python or other languages, ImagePro lets developers visually check the image being processed, which shortens debugging and speeds up development. It also unifies the programming method, which standardises recipes and reduces dependence on individual developers.

Effectiveness of combining rule-based and deep learning

First, as in the case study above, deep learning can overcome the accuracy limits of rule-based image processing such as template matching. Second, in a pipeline often used in visual inspection, image classification determines whether a candidate defect found by segmentation is a real defect or merely dust, and a rule-based tool then measures the area of the defect and makes a decision based on that area. Objective rules can thus be used to make good/bad judgements on the defective regions and detection results produced by deep learning, which increases the explainability of the judgement results.
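The second pattern, in which deep learning proposes candidates and an objective rule makes the final call, can be sketched as follows. The segmentation output is given directly as a binary mask, the defect-versus-dust classifier is a hypothetical callback, and the pixel size and area limit are illustrative values rather than figures from the article.

```python
from collections import deque
from typing import Callable, List, Sequence, Tuple

Pixel = Tuple[int, int]


def connected_regions(mask: Sequence[Sequence[int]]) -> List[List[Pixel]]:
    """Group foreground pixels (value 1) of a segmentation mask into 4-connected regions."""
    h = len(mask)
    w = len(mask[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    regions: List[List[Pixel]] = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not seen[y][x]:
                queue, region = deque([(y, x)]), []
                seen[y][x] = True
                while queue:  # breadth-first flood fill
                    cy, cx = queue.popleft()
                    region.append((cy, cx))
                    for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions


def judge(mask: Sequence[Sequence[int]], is_real_defect: Callable[[List[Pixel]], bool],
          mm2_per_pixel: float, max_area_mm2: float) -> str:
    """NG if any region classified as a real defect exceeds the area limit; otherwise OK."""
    for region in connected_regions(mask):
        if not is_real_defect(region):  # hypothetical classifier: defect vs dust
            continue
        area_mm2 = len(region) * mm2_per_pixel  # rule-based area measurement
        if area_mm2 > max_area_mm2:
            return "NG"
    return "OK"


def larger_than_one_pixel(region: List[Pixel]) -> bool:
    """Hypothetical stand-in classifier: single-pixel specks are treated as dust."""
    return len(region) > 1


mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
]
print(judge(mask, larger_than_one_pixel, mm2_per_pixel=0.01, max_area_mm2=0.03))  # NG
print(judge(mask, larger_than_one_pixel, mm2_per_pixel=0.01, max_area_mm2=0.05))  # OK
```

Because the final decision is an explicit area threshold, the reason for an NG result can be stated in physical units, which is the explainability benefit described above.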

High connectivity through support for generic communication standards

System integration is straightforward because ImagePro supports EtherNet/IP, digital I/O, serial communication, TCP/UDP communication, Modbus and other standards. It is also compatible with PLCs from many manufacturers and can be linked to a variety of robots and machines, so it can be deployed on actual production lines.
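As an illustration of how an inspection result might be handed to a PLC over one of these standards, the sketch below builds a Modbus TCP request for function code 0x06 (Write Single Register) using only the standard library. The register address and the numeric codes for OK and NG are hypothetical values that would be agreed with the PLC programmer, and the article does not say how ImagePro itself formats such messages. Sending the bytes over a TCP socket (conventionally to port 502) is omitted to keep the example self-contained.

```python
import struct

WRITE_SINGLE_REGISTER = 0x06

# Hypothetical mapping agreed with the PLC side.
RESULT_REGISTER = 100
RESULT_CODES = {"OK": 1, "NG": 2}


def modbus_write_register_frame(transaction_id: int, unit_id: int,
                                address: int, value: int) -> bytes:
    """Build a Modbus TCP frame: MBAP header followed by a Write Single Register PDU."""
    # PDU: function code (1 byte), register address (2 bytes), register value (2 bytes)
    pdu = struct.pack(">BHH", WRITE_SINGLE_REGISTER, address, value)
    # MBAP header: transaction id, protocol id (always 0 for Modbus), length of the
    # bytes that follow (unit id + PDU), unit id. All fields are big-endian.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


frame = modbus_write_register_frame(1, 1, RESULT_REGISTER, RESULT_CODES["NG"])
print(frame.hex())  # 000100000006010600640002
```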